I have a sneaky suspicion that AI detectors are nothing but guesswork and smoke & mirrors. They are meant to detect AI-generated content and have quickly become the internet’s gatekeepers of authenticity.

This research started a couple of weeks ago when a customer contacted us asking why undetectable.ai was flagging content we had written for them as AI-generated. This took the team by surprise, as nothing we produced for that customer was AI-generated, and it has led us down a rabbit hole that really needs looking at.

How reliable are these gatekeepers, really? How can we trust their output when it is contradicted in multiple places? To find out, we conducted a simple experiment: we used AI to generate a descriptive product review and ran it through a series of popular AI detectors.

This article explores the inconsistencies we uncovered and what this might mean for content creators, educators, and professionals. We really need to know if AI detection is currently more guesswork than science.

Now, since we knew the text had been generated entirely by AI from a very basic prompt, you’d expect that it “should” show as 100% AI in every detector.

The results? Wildly inconsistent.

| Detector | Result |

|---|---|

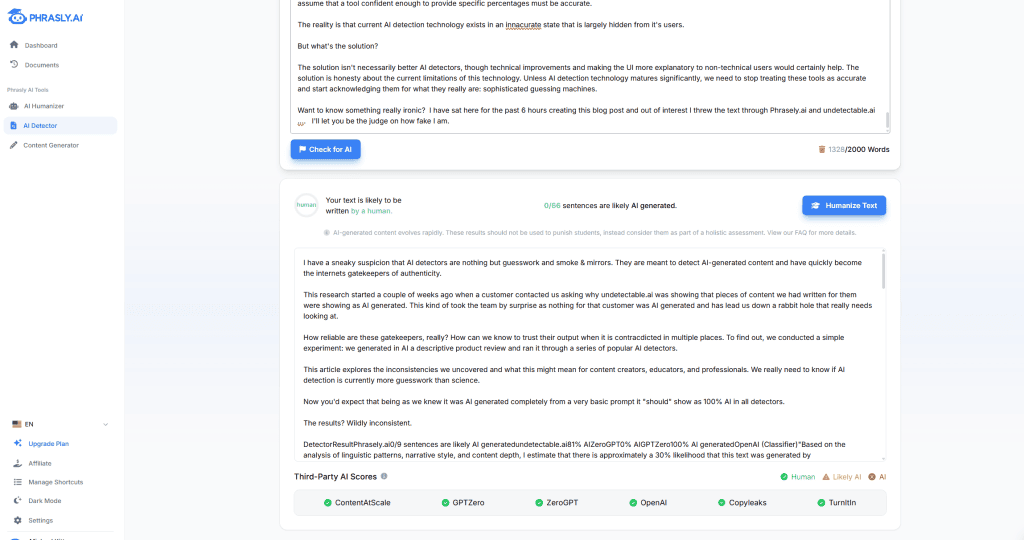

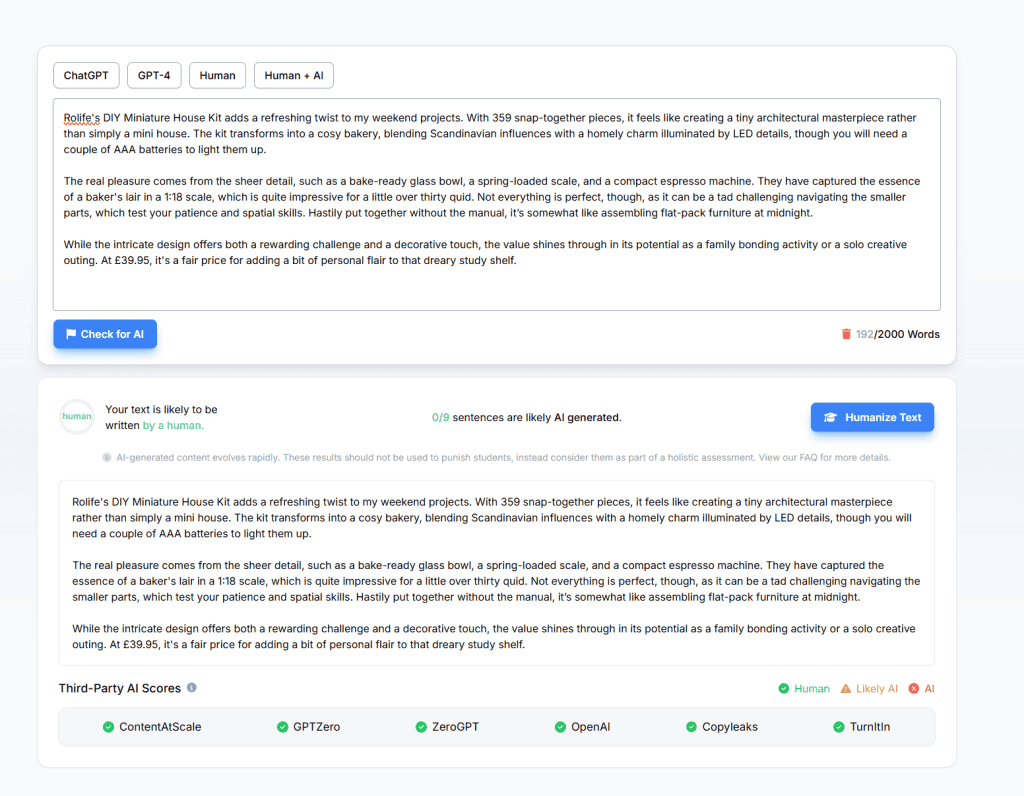

| Phrasely.ai | 0/9 sentences are likely AI generated |

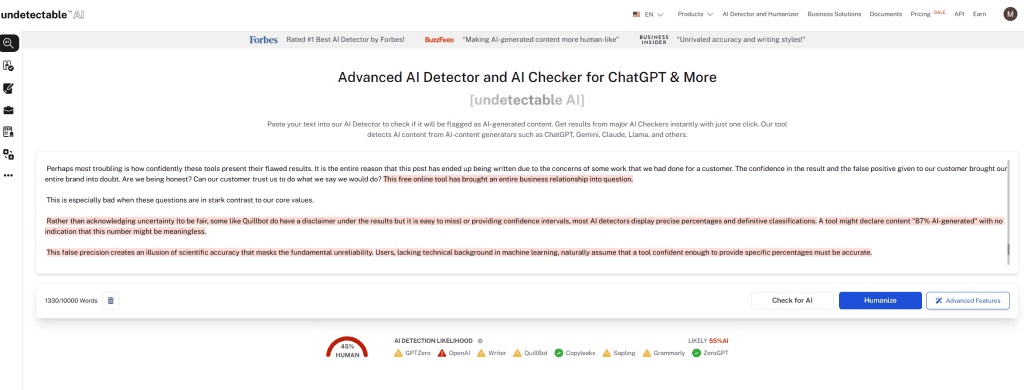

| undetectable.ai | 81% AI |

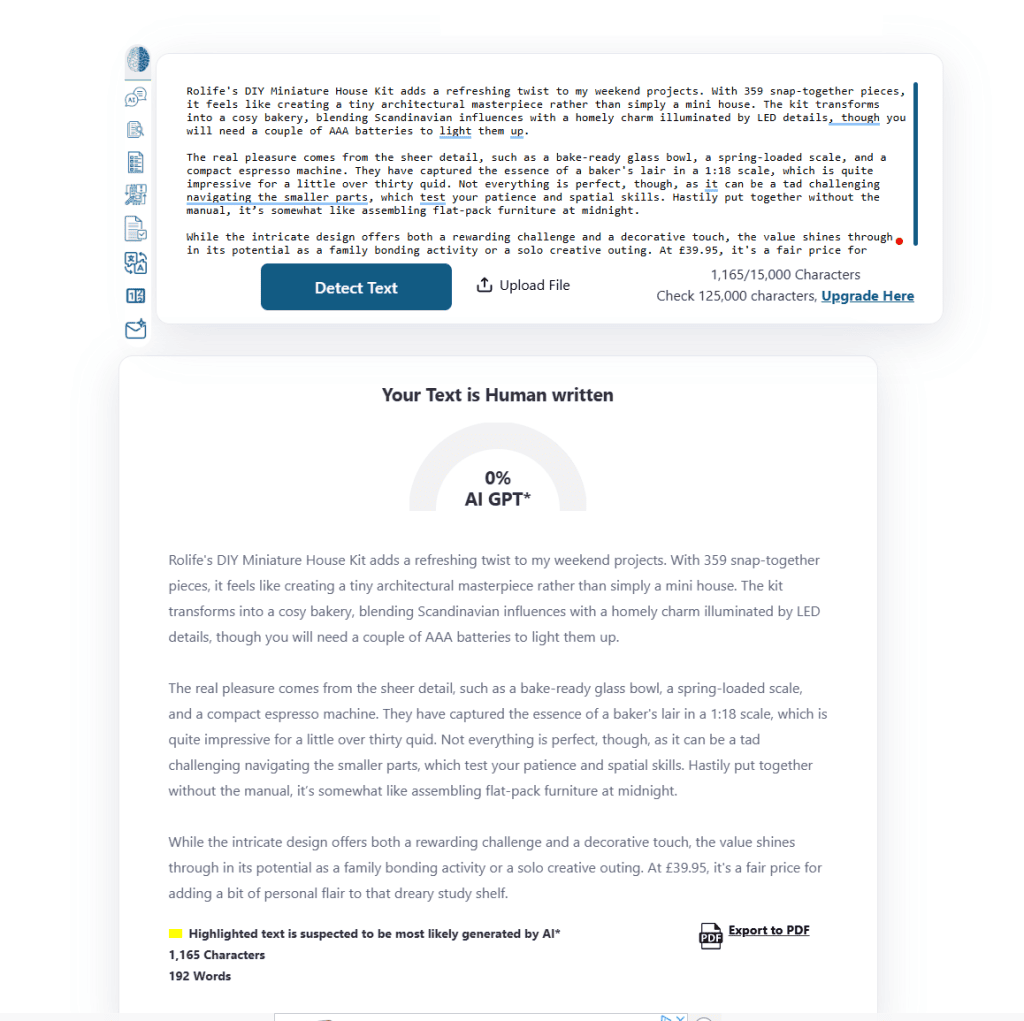

| ZeroGPT | 0% AI |

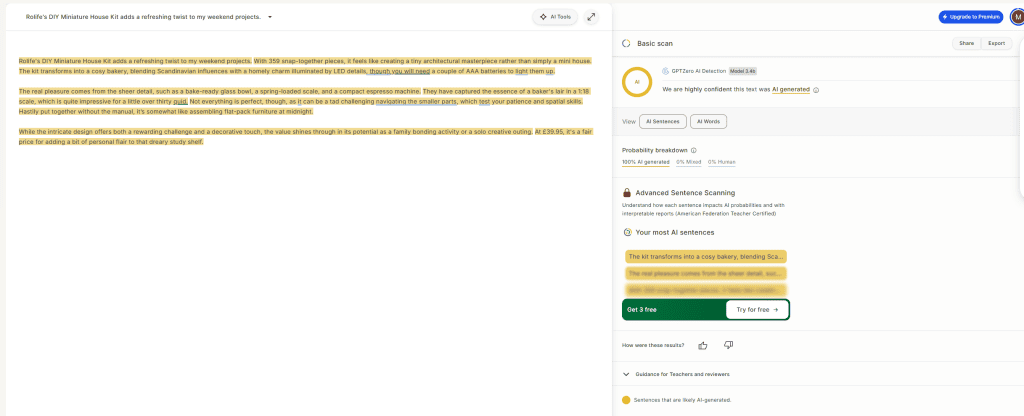

| GPTZero | 100% AI generated |

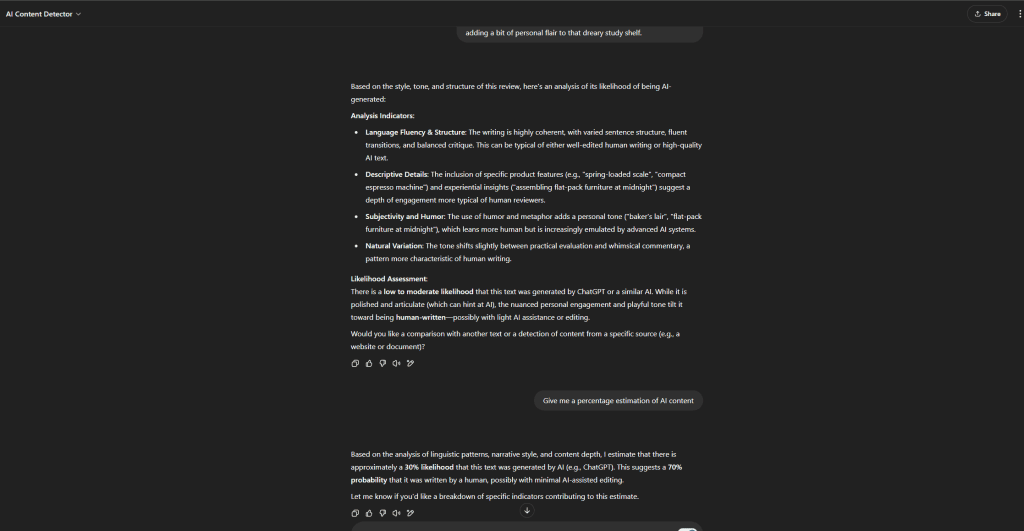

| OpenAI (Classifier) | “Based on the analysis of linguistic patterns, narrative style, and content depth, I estimate that there is approximately a 30% likelihood that this text was generated by AI” |

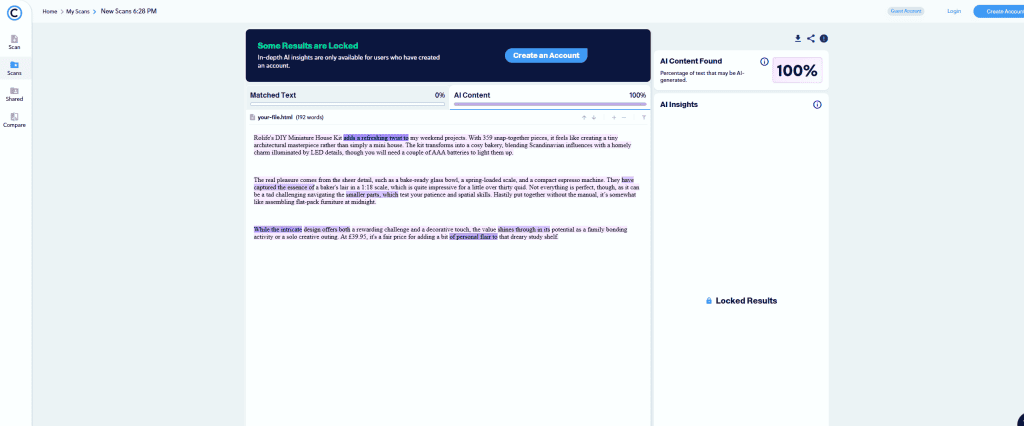

| Copyleaks | 100% AI |

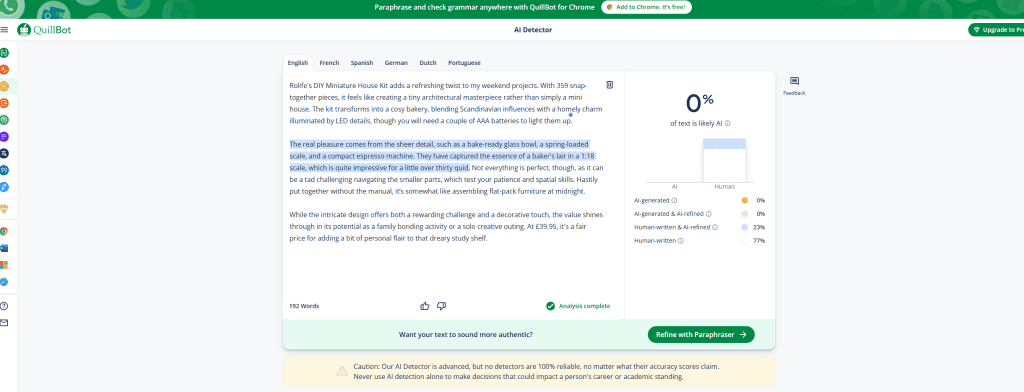

| Quillbot | 0% AI |

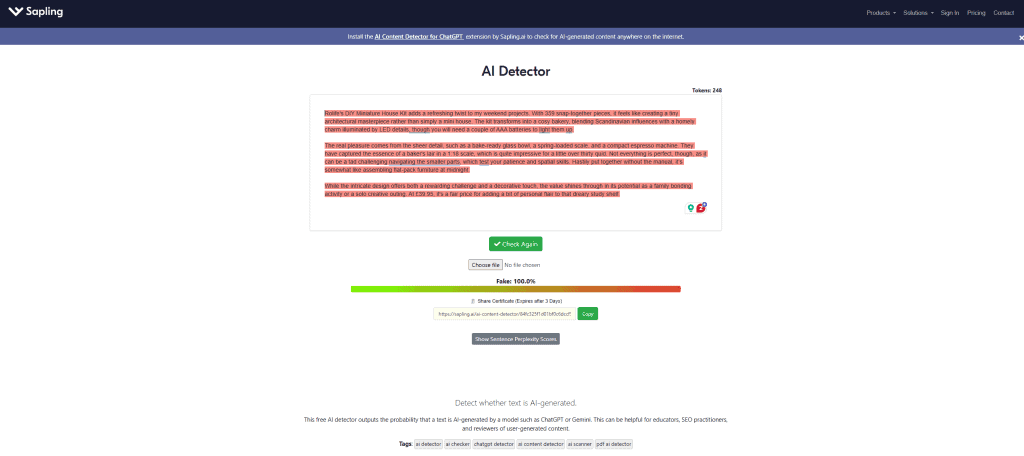

| Sapling | 100% AI |

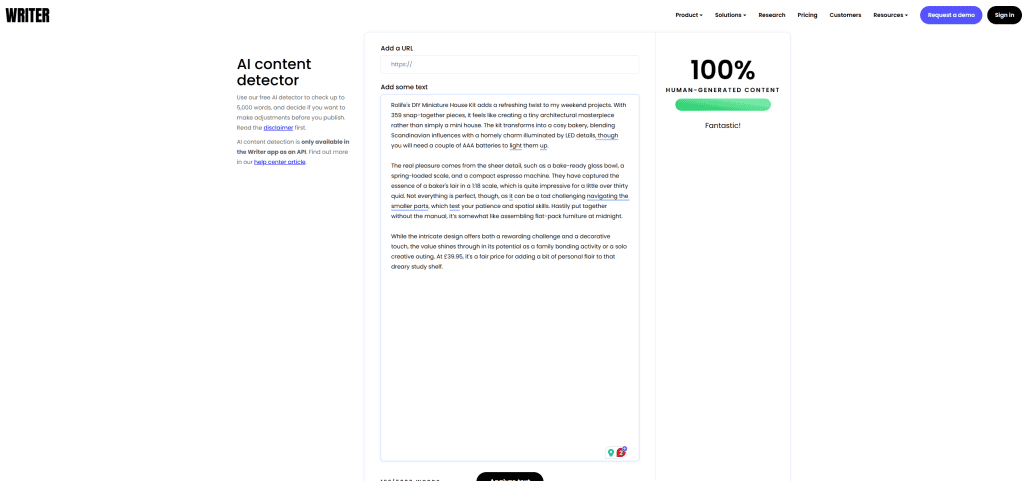

| Writer | 0% AI |

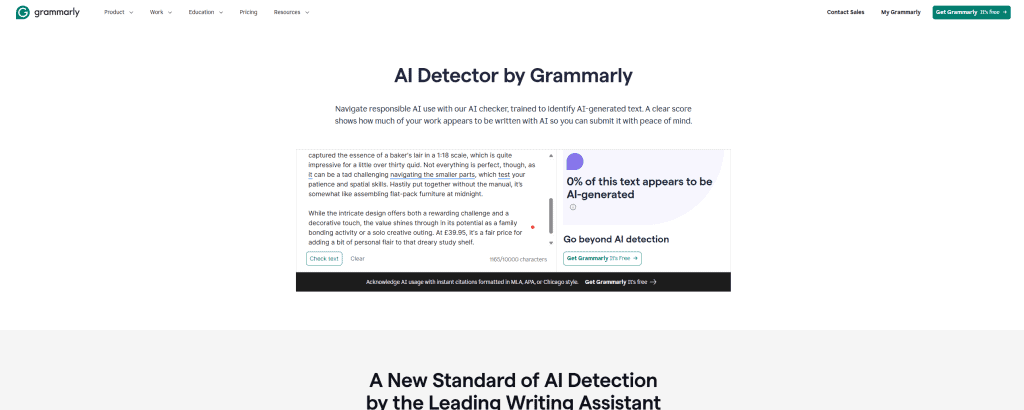

| Grammarly | 0% AI |

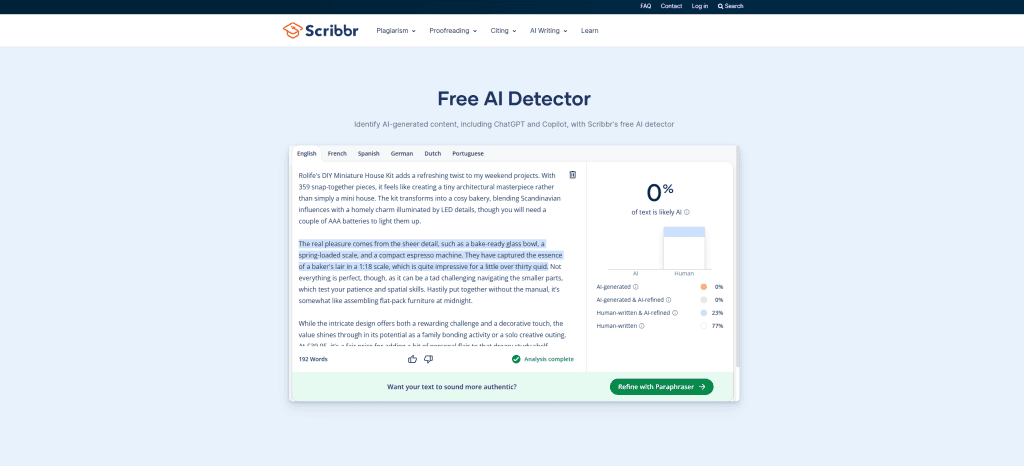

| Scribbr | 0% AI |

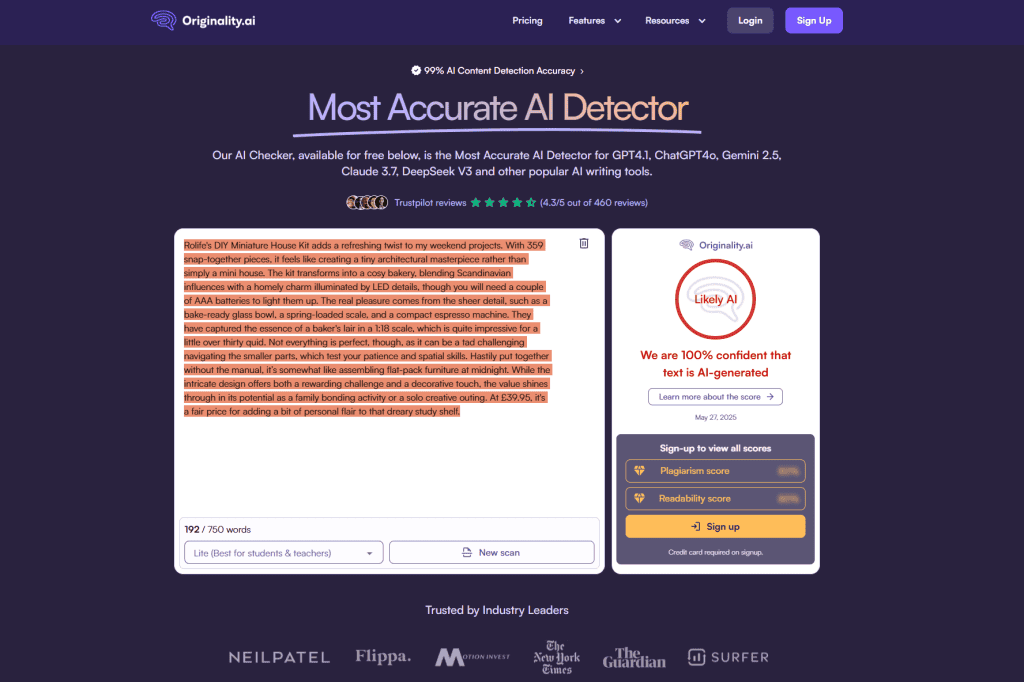

| originality.ai | 100% AI |

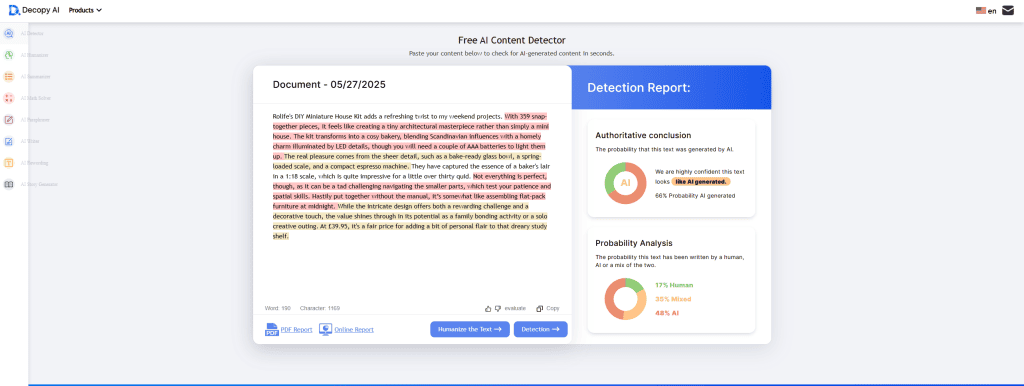

| Decopy AI | 66% AI |

| aidetector.com | 0% likely AI generated |

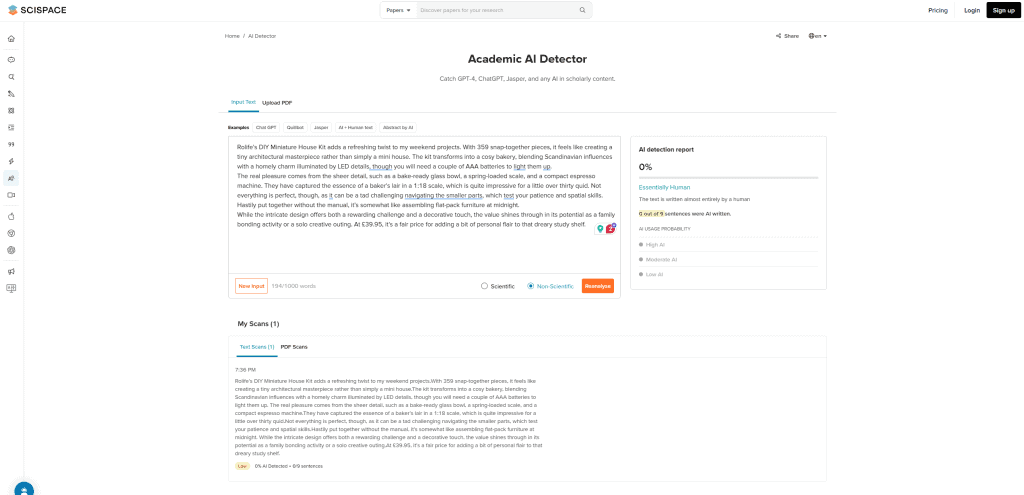

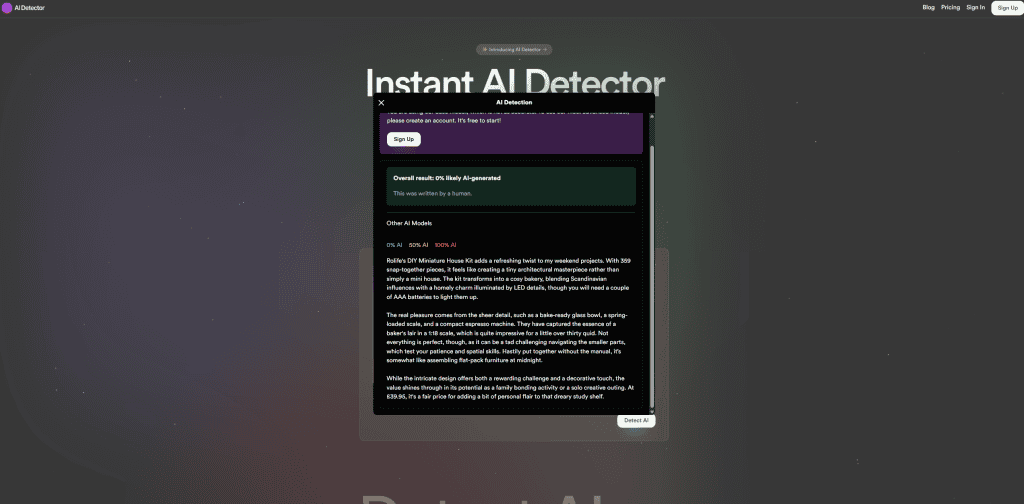

| scispace | 0% AI |

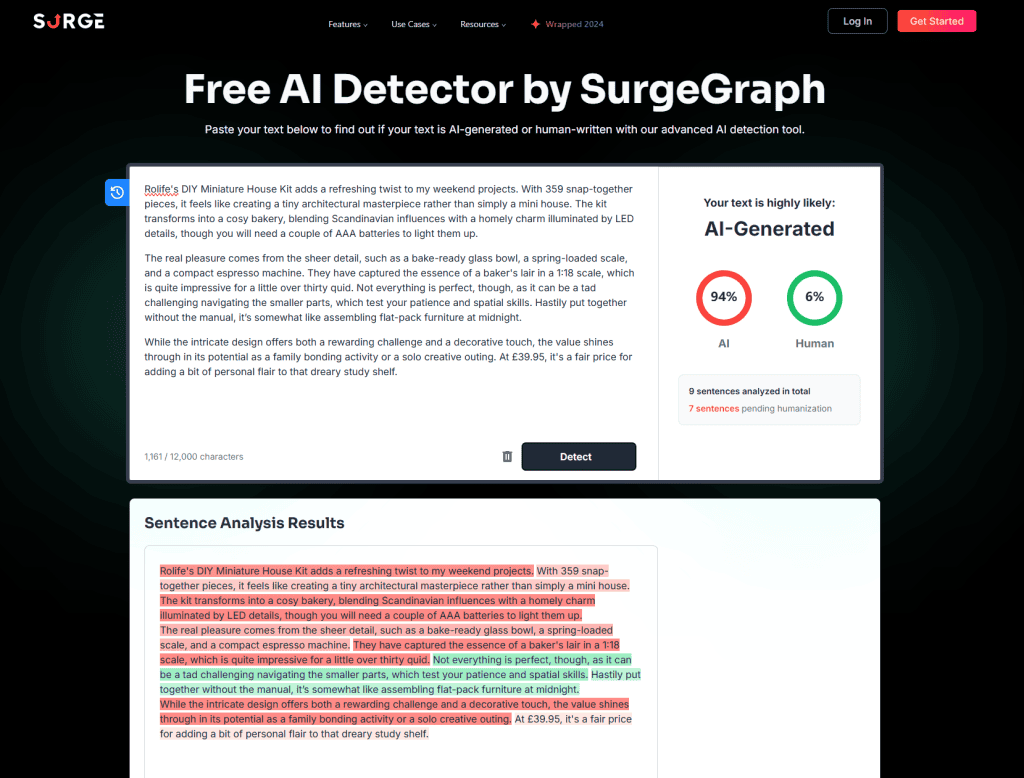

| surgegraph.io | 94% AI |

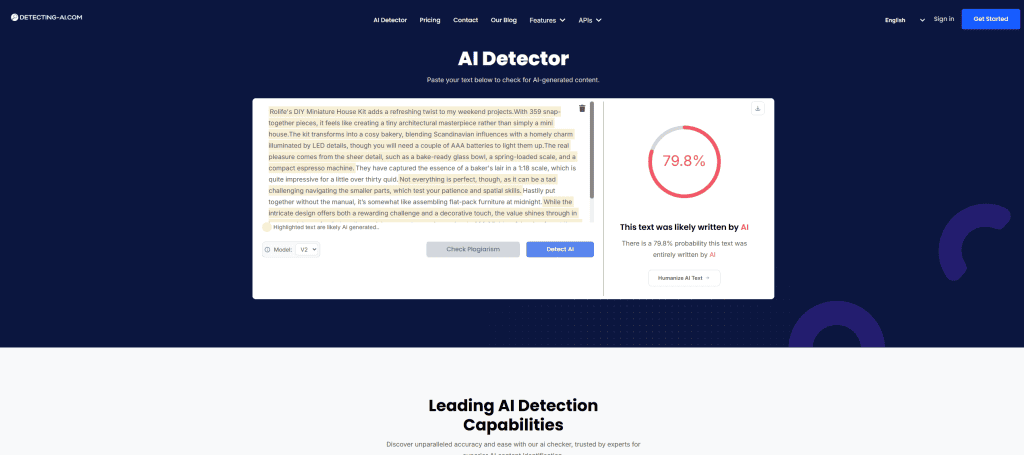

| detecting-ai.com | 79.8% AI |

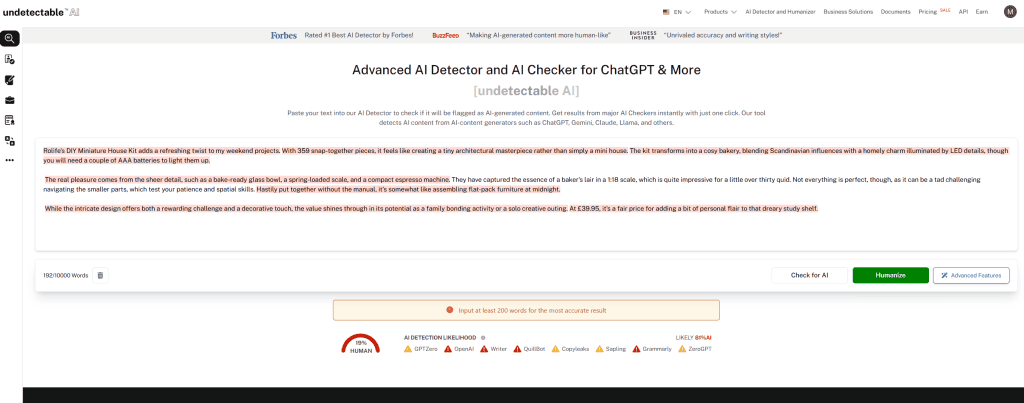

All the screenshots from the test are below

The numbers don’t lie, even if the detectors apparently do. Out of 17 tools tested, only 4 correctly identified our AI-generated content as 100% artificial. I’m pretty sure that any other QA or testing system with that accuracy would be removed immediately.

The remaining 13 detectors offered an array of confidence scores. One rated the content 79.8% AI, another put the likelihood of AI generation at only around 30%, and a surprising eight confidently declared it entirely human-authored.

This feels like more than a minor calibration issue; it’s more like a fundamental breakdown in the core promise these tools make to their users.

I don’t know about you, but a 23.5% success rate is eye-opening for a tool that could be used to affect student grades or potentially open a business up to claims that its work was not done by a human.
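The headline numbers are simple arithmetic over the results table. As a sanity check, here is a minimal Python sketch that re-tallies them. Mapping Phrasely.ai’s “0/9 sentences” to 0% and the OpenAI classifier’s “approximately 30%” to 30% is our own simplification, not something either tool reports in that form.

```python
# Results transcribed from the table above, as the % AI each tool reported.
# Simplifying assumptions: Phrasely.ai's "0/9 sentences" is treated as 0%,
# and the OpenAI classifier's "approximately 30%" is treated as 30%.
results = {
    "Phrasely.ai": 0.0,
    "undetectable.ai": 81.0,
    "ZeroGPT": 0.0,
    "GPTZero": 100.0,
    "OpenAI (Classifier)": 30.0,
    "Copyleaks": 100.0,
    "Quillbot": 0.0,
    "Sapling": 100.0,
    "Writer": 0.0,
    "Grammarly": 0.0,
    "Scribbr": 0.0,
    "originality.ai": 100.0,
    "Decopy AI": 66.0,
    "aidetector.com": 0.0,
    "scispace": 0.0,
    "surgegraph.io": 94.0,
    "detecting-ai.com": 79.8,
}


def summarize(scores):
    """Return (tools tested, exact 100% verdicts, 0% verdicts, hit rate)."""
    total = len(scores)
    correct = sum(1 for s in scores.values() if s == 100.0)
    fully_human = sum(1 for s in scores.values() if s == 0.0)
    return total, correct, fully_human, correct / total


total, correct, fully_human, rate = summarize(results)
print(f"{correct}/{total} correct ({rate:.1%}); {fully_human} said 0% AI")
```

Counting only exact 100% verdicts as “correct” is deliberately strict. A looser rule, such as anything above 50%, would also credit undetectable.ai, Decopy AI, surgegraph.io and detecting-ai.com, but would still leave nine of the 17 tools rating a fully AI-written review as mostly human.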

The Methodology Behind Our Madness

Our experiment was deliberately simple to avoid any variables that might skew results. We used ChatGPT to generate a product review for a randomly chosen Amazon product, based on the product details and existing reviews we scraped from its listing. The content was straightforward, informative, and typical of what you’d find on any e-commerce platform.

We then fed this identical text through 17 different AI detection tools, ranging from free online checkers to premium services. The platforms included all the popular ones like phrasely.ai, Copyleaks, Grammarly, GPTZero, and numerous others that appear in Google’s search results for “AI content detector.”

What we discovered was nothing short of a chaotic landscape of contradictory verdicts.

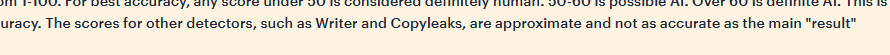

But it goes deeper. The undetectable.ai API documentation states the following:

They literally state that the scores they show from external platforms are approximate guesses. And yet they promote their tool as something people should trust to tell them whether a piece of content is AI-generated or not.

If these tools are supposed to offer certainty in the age of synthetic text, they’ve done the exact opposite.

The Technical Smoke and Mirrors

To understand why AI detectors fail so spectacularly, we would need to examine how they actually work. Unfortunately we can’t, because each one is a closed black box, just like the LLMs themselves. Most current detection tools seem to rely on statistical analysis of text patterns, looking for characteristics they’ve learned to associate with AI generation. The aggregators seem to simply call the other detectors’ APIs and assume the responses are accurate.

The problem is that factors like sentence structure variety, vocabulary diversity, punctuation patterns, and stylistic consistency aren’t exclusive to AI writing. Humans can write in patterns that trigger AI flags, especially when writing professionally or following style guidelines.
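To make that concrete, here is a minimal Python sketch of two crude style signals often discussed in this context: how much sentence length varies (sometimes called “burstiness”) and vocabulary diversity measured as a type-token ratio. These are assumptions for illustration only; we don’t know which features, if any, the tools above actually use, and `style_features` is our own hypothetical helper.

```python
import re
import statistics


def style_features(text):
    """Compute two crude style signals of the kind detectors are thought to use.

    Hypothetical illustration only: no detector publishes its feature set.
    """
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    lengths = [len(s.split()) for s in sentences]
    # Sentence-length spread ("burstiness"): low values mean uniform sentences.
    spread = statistics.pstdev(lengths) if len(lengths) > 1 else 0.0
    # Type-token ratio: distinct words divided by total words.
    words = re.findall(r"[a-z']+", text.lower())
    ttr = len(set(words)) / len(words) if words else 0.0
    return {"sentences": len(sentences), "length_spread": spread, "type_token_ratio": ttr}


# A deliberately uniform, style-guide-like passage that a human could easily write.
manual = ("Remove the cover. Insert the battery. Replace the cover. "
          "Press the power button. Wait for the light.")
print(style_features(manual))
```

A human carefully following a style guide, as in this instruction-manual snippet, produces short, uniform sentences with a low length spread: the kind of regularity a detector leaning on signals like these could plausibly read as machine-like.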

I had probably never even noticed an “em dash” before 2022, but sure enough, they appear in every book I’ve read that was published before this AI boom.

The Perplexity Problem

Many AI detectors rely heavily on perplexity scores, which essentially measure how “surprised” a language model would be by each word choice in the text.

Low perplexity (predictable word choices) supposedly indicates AI generation, while high perplexity (unexpected word choices) suggests human authorship.

But this approach has fundamental flaws. Skilled human writers often make predictable word choices, especially when clarity is the goal. Technical writing, instruction manuals, blog content, marketing collateral and formal business communication are typically low-perplexity pieces of work.
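The perplexity idea is simple enough to sketch. Below is a minimal Python version of the standard formula, perplexity = exp(-(1/N) * sum(log p_i)), where p_i is the probability a language model assigned to the i-th token. The token probabilities and the 3.0 cut-off are invented for illustration; no real model or detector produced them.

```python
import math


def perplexity(token_probs):
    """Perplexity from the probability a language model assigned to each token.

    PPL = exp(-(1/N) * sum(log p_i)); lower means the text was more predictable.
    """
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_log_prob = sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(-avg_log_prob)


# Hypothetical per-token probabilities (not from a real model):
# a plain, clarity-first sentence vs. a more unusual, florid one.
plain = [0.6, 0.5, 0.7, 0.4, 0.6]
florid = [0.2, 0.05, 0.1, 0.3, 0.08]

# A made-up cut-off; detectors that use perplexity do not publish theirs.
HYPOTHETICAL_THRESHOLD = 3.0

for label, probs in [("plain", plain), ("florid", florid)]:
    ppl = perplexity(probs)
    verdict = "flagged as AI" if ppl < HYPOTHETICAL_THRESHOLD else "passes as human"
    print(f"{label}: perplexity {ppl:.2f} -> {verdict}")
```

Under a rule like this, the clear, predictable sentence is the one that gets flagged, which is exactly the problem for careful human writers.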

The Training Data Trap

Another critical issue lies in the training data used to build these detectors. Many AI detectors are likely trained on datasets that compare known outputs from older models (like GPT-3 or early GPT-4) against human writing samples. As AI models evolve rapidly, the characteristics these detectors learned to identify become outdated.

GPT-4o, Claude 4, and other current models produce text that’s qualitatively different from their predecessors’ and often avoids many of the telltale patterns that earlier detectors learned to identify. This creates a constant arms race in which detection tools are always fighting the last war.

Moreover, the human writing samples used in training datasets often come from specific sources such as academic papers and news articles. This could create an unintended bias where detectors flag writing styles that don’t match their training data as “AI-generated”, even when they were produced by humans from different backgrounds, cultures, styles or writing contexts.

It may be that AI writing is becoming indistinguishable from human writing, in which case AI detectors will eventually be unable to tell the difference at all, and we will simply have to live in a world where we assume everything is at least partially AI.

The Confidence Game

Perhaps most troubling is how confidently these tools present their flawed results. That confidence is the entire reason this post exists: a customer raised concerns about work we had done for them. The tool’s confident false positive cast doubt on our entire brand. Are we being honest? Can our customer trust us to do what we say we do? A free online tool has called an entire business relationship into question.

This is especially bad when these questions are in stark contrast to our core values.

Rather than acknowledging uncertainty (to be fair, some, like Quillbot, do show a disclaimer under the results, but it is easy to miss) or providing confidence intervals, most AI detectors display precise percentages and definitive classifications. A tool might declare content “87% AI-generated” with no indication that this number might be meaningless.

This false precision creates an illusion of scientific accuracy that masks the fundamental unreliability. Users, lacking technical background in machine learning, naturally assume that a tool confident enough to provide specific percentages must be accurate.
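For a sense of what “providing confidence intervals” could look like, here is a minimal Python sketch of a Wilson score interval, a standard way to put error bars on a proportion, applied to Phrasely.ai’s “0/9 sentences” result. It assumes, purely for illustration, that each sentence is an independent yes/no trial; this is not how Phrasely.ai or any other tool says it computes its scores.

```python
import math


def wilson_interval(successes, trials, z=1.96):
    """Wilson score interval for a proportion (z=1.96 gives roughly 95%)."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)) / denom
    # Clamp to [0, 1] to absorb floating-point noise at the edges.
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Phrasely.ai reported 0 of 9 sentences as likely AI generated.
# Assumption: treat each sentence as an independent yes/no trial.
low, high = wilson_interval(0, 9)
print(f"0/9 flagged -> plausible AI share between {low:.0%} and {high:.0%}")
```

Presented this way, a flat “0%” becomes “somewhere between 0% and roughly 30%”, and even that range says nothing about whether the underlying sentence classifier is right in the first place.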

The reality is that current AI detection technology is far less accurate than it appears, and that inaccuracy is largely hidden from its users.

But what’s the solution?

The solution isn’t necessarily better AI detectors, though technical improvements and making the UI more explanatory to non-technical users would certainly help. The solution is honesty about the current limitations of this technology. Unless AI detection technology matures significantly, we need to stop treating these tools as accurate and start acknowledging them for what they really are: sophisticated guessing machines.

Want to know something really ironic? I have spent the past 6 hours writing this blog post, and out of interest I ran the text through Phrasely.ai and undetectable.ai… I’ll let you be the judge of how fake I am.